2. Introduction

2.1 Ontology Requirement Specification Document

| Privacy Paradigm ODRL Profile - PPOP | |
|---|---|
| 1. Purpose | |
| The purpose of this profile is to support the specification of transparency measures in the context of data sharing activities and data-intensive flows between multiple data subjects, controllers and processors in decentralised data storage environments. | |
| 2. Scope | |
| The scope of this profile is limited to the definition of data sharing policies aligned with European data protection regulations and with ethical guidelines related to the transparency of Artificial Intelligence. | |
| 3. Implementation Language | |
| RDF, RDFS | |
| 4. Intended End-Users | |
| Developers of decentralised data storage and sharing solutions | |
| 5. Intended Uses | |
| Use 1 - Classify the transparency practices of a Personal Information Management System (PIMS) | |
| Use 2 - Define access control policies for legal and ethical access to group and individual personal data stores | |
| Use 3 - Model agreements/contracts between data subjects and intermediaries in new data governance schemes | |
| Use 4 - Model safeguards for the trustworthiness of AI systems and the respective rights and duties | |
| Use 5 - Create a machine- and human-readable policy notice template | |
| 6. Ontology Requirements | |
| a. Non-Functional Requirements | |
| NFR 1. The ontology shall be published online with standard documentation. | |
| b. Functional Requirements: Groups of Competency Questions | |
| Related to Safeguards | |
| Trustworthiness / Reliability | CQTS1 - To what extent did you ensure that the system would function reliably under harsh conditions? |
| Safety | CQSF1 - What considerations did you take to prioritise the safety and the mental and physical integrity of people when scanning horizons of technological possibility and when conceiving of and deploying AI applications? |
| Security | CQSC1 - What strategies did you establish to ensure that the system continuously remains functional and accessible to its authorised users? |
| | CQSC2 - What protocols did you use to keep confidential and private information secure even under hostile or adversarial conditions? |
| | CQSC3 - To what extent is the system capable of maintaining the integrity of the information that constitutes it, including protecting its architectures from the unauthorised modification or damage of any of its component parts? |
| Privacy | CQPR1 - What measures did you take to enhance privacy? |
| Explainability | CQEX1 - What mechanisms did you consider to provide an explanation and justification of both the content of algorithmically supported decisions and the processes behind their production in plain, understandable, and coherent language? Did you research and try to use the simplest and most interpretable model possible for the application in question? |
| | CQEX2 - What considerations were taken into account when the rationale behind a specific decision or behaviour was communicated and clarified? Did you establish mechanisms to inform (end-)users of the reasons and criteria behind the AI system's outcomes? |
| | CQEX3 - What strategies did you use to provide a formal or logical explanation? |
| | CQEX4 - What strategies did you use to provide a semantic explanation (an explanation of the technical rationale behind the outcome)? |
| Traceability | CQTR1 - What measures can ensure the traceability of outcomes and decisions? |
| | CQTR2 - What methods are used to ensure the traceability of the design and development of algorithmic systems trained on personal data? |
| Auditability | CQAU1 - What measures did you take to ensure that every step of the process of designing and implementing AI is accessible for audit, oversight, and review? Did builders and implementers of algorithmic systems keep records and make accessible the information that enables monitoring from the stages of collection, pre-processing, and modelling to training, testing, and deploying? |
| Avoid Bias | CQFR1 - How do you ensure that the system has been sufficiently trained so that it can be developed and implemented responsibly and without bias? |
| | CQFR2 - Did you ensure that model architectures did not include target variables, features, processes, or analytical structures (correlations, interactions, and inferences) which are unreasonable, morally objectionable, or unjustifiable? |
| | CQFR3 - What considerations were taken into account to encourage all voices to be heard and all opinions to be weighed seriously and sincerely throughout the production and use lifecycle? |
| Transparency | CQTP1 - What considerations were taken into account when addressing the transparency of an AI system? |
| | CQTP2 - If data was collected from the data subject, has the controller provided the data subject with the mandatory information? |
| | CQTP3 - If data was not collected from the data subject, has the controller provided the data subject with the mandatory information? |
| | CQTP4 - Is this the first time that the data subject is being contacted? |
| | CQTP5 - Is there any applicable exemption to the information obligation? |
| | CQTP6 - Has the user of data intermediary services been provided with the mandatory information? |
| | CQTP7 - Has the user of a mere conduit service been provided with information about the restrictions on the service? |
| | CQTP8 - Were the purposes associated with each particular category of personal data communicated to the data subject? |
| | CQTP9 - Was the particular legitimate interest associated with each particular category of personal data communicated to the data subject? |
| | CQTP10 - Were the data recipients associated with each particular category of personal data communicated to the data subject? |
| | CQTP11 - Were the reasons for the data transfer associated with each particular category of personal data communicated to the data subject? |
| | CQTP12 - Were the data retention periods associated with each particular category of personal data communicated to the data subject? |
| | CQTP13 - Were the conditions and restrictions related to the use of the service communicated to the user? |
| Related to Rights | |
| Non-discrimination | CQDS1 - How do you ensure that the decisions of the system do not have discriminatory or inequitable impacts on the lives of the people they affect? |
| Autonomy / Informed Decisions | CQAT1 - How do you ensure that users are able to make free and informed decisions (in interaction with a system)? |
| Right to Privacy | CQRP1 - Did you build in mechanisms for notice and control over personal data? |
| Related to Duties | |
| Accuracy | CQAC1 - Did you ensure that the system generates correct output? |
| | CQAC2 - Did you assess whether you can analyse your training and testing data? Can you change and update these data over time? |
| Other CQs | |
| Intended Purpose | CQPP1 - Did you clarify the purpose of the AI system and who or what may benefit from the product/service? |
| Accountability | CQRE1 - How do you establish a continuous chain of human responsibility across the whole AI project delivery flow, from the design of an AI system to its algorithmically steered outcomes? |
| Impacts on business | CQBU1 - If the organisation's business model relies on personal data, where does the data come from to create value for the organisation? |
| Well-being | CQWE1 - To what extent did you ensure that the use of technology fosters and cultivates the welfare and well-being of data subjects whose interests are impacted by its use? |
| Informed data subjects | CQIN1 - Did you enable people to understand how an AI system is developed, trained, and deployed in the relevant application domain, and how it operates there, so that consumers, for example, can make more informed choices? |
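
Use 3 involves modelling agreements between data subjects and intermediaries as ODRL policies. As a rough illustration only, the Python sketch below builds an ODRL Agreement in JSON-LD in which a data subject (assigner) permits a data intermediary (assignee) to use a resource in their personal data store, constrained to a single purpose taken from DPV. It relies solely on core ODRL terms and the DPV purpose `dpv:ServiceProvision`; it uses no PPOP-specific term, and the `example.org` IRIs and the `build_agreement` helper are hypothetical.

```python
import json

# Hypothetical IRIs for illustration only; they are not defined by this profile.
DATA_SUBJECT = "https://example.org/people/alice"
INTERMEDIARY = "https://example.org/orgs/data-intermediary"
TARGET = "https://example.org/pods/alice/health-records"


def build_agreement(uid: str, purpose: str) -> dict:
    """Hypothetical helper: return an ODRL Agreement (JSON-LD) granting the
    intermediary permission to use TARGET, limited to one DPV purpose."""
    return {
        # Core ODRL context plus a prefix so "dpv:..." compact IRIs expand.
        "@context": [
            "http://www.w3.org/ns/odrl.jsonld",
            {"dpv": "http://www.w3.org/ns/dpv#"},
        ],
        "@type": "Agreement",
        "uid": uid,
        "permission": [
            {
                "target": TARGET,
                "assigner": DATA_SUBJECT,   # the party granting the permission
                "assignee": INTERMEDIARY,   # the party receiving it
                "action": "use",
                # The permission only applies when the purpose equals `purpose`.
                "constraint": [
                    {
                        "leftOperand": "purpose",
                        "operator": "eq",
                        "rightOperand": {"@id": purpose},
                    }
                ],
            }
        ],
    }


agreement = build_agreement("https://example.org/policies/1", "dpv:ServiceProvision")
print(json.dumps(agreement, indent=2))
```

Expressing the purpose as a constraint rather than as free text is what allows a policy consumer to check it automatically; how PPOP's own terms would be added to such a policy is defined by the profile, not by this sketch.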

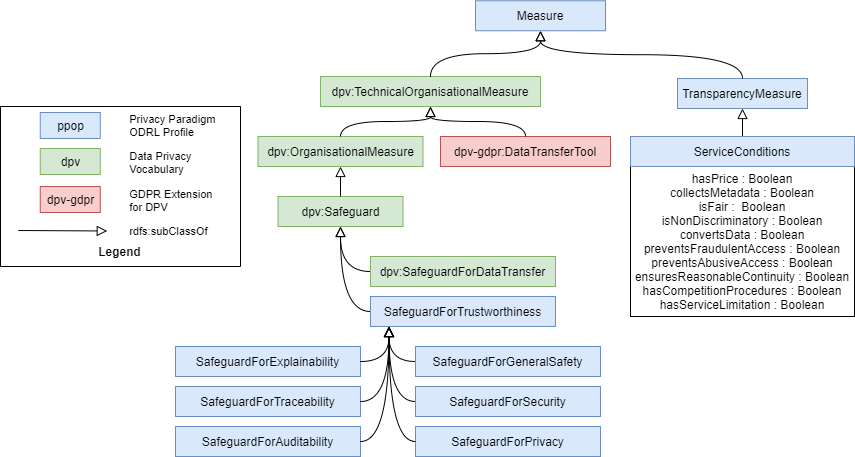

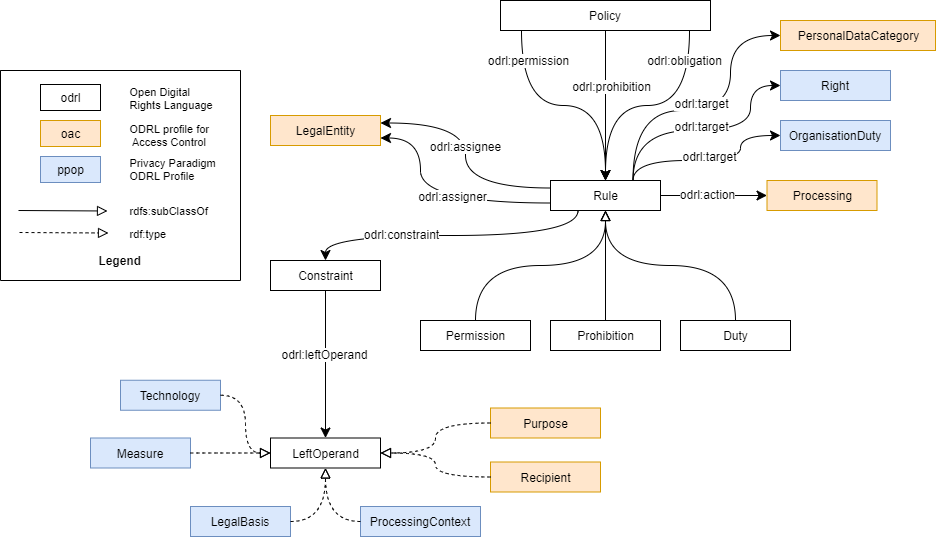

2.2 Profile diagram

The base concepts specified by the Privacy Paradigm ODRL Profile are shown in the figure below.

2.3 Document Conventions

| Prefix | Namespace | Description |
|---|---|---|
| odrl | http://www.w3.org/ns/odrl/2/ | [odrl-vocab] [odrl-model] |
| rdf | http://www.w3.org/1999/02/22-rdf-syntax-ns# | [rdf11-concepts] |
| rdfs | http://www.w3.org/2000/01/rdf-schema# | [rdf-schema] |
| owl | http://www.w3.org/2002/07/owl# | [owl2-overview] |
| dct | http://purl.org/dc/terms/ | [dct] |
| ns1 | http://purl.org/vocab/vann/ | [ns1] |
| xsd | http://www.w3.org/2001/XMLSchema# | [xsd] |
| skos | http://www.w3.org/2004/02/skos/core# | [skos] |
| dpv | http://www.w3.org/ns/dpv# | [dpv] |
| ppop | https://w3id.org/ppop | [ppop] |
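
Prefixed names such as `odrl:Permission` or `dpv:Purpose` are shorthand for the full IRI obtained by appending the local name to the namespace listed in the table above. The Python sketch below shows that expansion; the `expand` function is illustrative and not part of the profile. The `ppop` prefix is deliberately left out: its namespace as listed has no trailing `#` or `/`, so plain concatenation would probably not produce the intended term IRIs, and its exact form should be checked against the published ontology.

```python
# Prefix-to-namespace map copied from the table above (ppop intentionally omitted).
PREFIXES = {
    "odrl": "http://www.w3.org/ns/odrl/2/",
    "rdf": "http://www.w3.org/1999/02/22-rdf-syntax-ns#",
    "rdfs": "http://www.w3.org/2000/01/rdf-schema#",
    "owl": "http://www.w3.org/2002/07/owl#",
    "dct": "http://purl.org/dc/terms/",
    "ns1": "http://purl.org/vocab/vann/",
    "xsd": "http://www.w3.org/2001/XMLSchema#",
    "skos": "http://www.w3.org/2004/02/skos/core#",
    "dpv": "http://www.w3.org/ns/dpv#",
}


def expand(curie: str) -> str:
    """Expand a prefixed name such as 'odrl:Permission' into a full IRI.

    Raises ValueError if there is no ':' or the prefix is not in PREFIXES.
    """
    prefix, sep, local = curie.partition(":")
    if not sep or prefix not in PREFIXES:
        raise ValueError(f"unknown or missing prefix in {curie!r}")
    return PREFIXES[prefix] + local


print(expand("odrl:Permission"))  # http://www.w3.org/ns/odrl/2/Permission
print(expand("dpv:Purpose"))      # http://www.w3.org/ns/dpv#Purpose
```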